New - Speech Characteristics as Idiographic Memory Measure

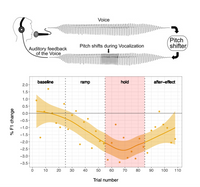

Recent work has implicated cognitive factors in sensorimotor learning during speech. Yet the exact way in which cognition affects sensorimotor learning is unclear. Individual differences in cognition can be quantified using model-based estimates of learning and forgetting rates. In this project, we investigate the relationship between sensorimotor learning rate during speech and memory capacities. Preliminary analyses of the data suggest that the amount of sensorimotor learning is associated with forgetting rate in a learning task.

These results suggest that general, trait-like differences in cognition may be associated with individual differences in speech production.

New - Cognitive and Metacognitive Signatures of Memory Retrieval Performance in Speech Prosody

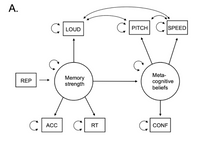

In this project, we examined which cognitive and metacognitive proxies of memory strength are present in the speech signal during spoken retrieval attempts. Participants studied vocabulary items using spoken retrieval practice. We demonstrate that it is possible to extract information about (1) the accuracy of a response and (2) a speaker’s subjective confidence in a response from the speech signal. Using structural equation modeling, we find evidence that the objective memory strength of a response is mainly reflected in the speaker's loudness, whereas the metacognitive process of judging one's certainty or doubt about a response is mainly reflected in the speaker's pitch and speaking speed. Read more about this project here.

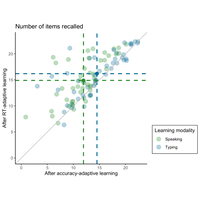

Speech-Based Adaptive Learning

The main focus of my PhD project is exploring possibilities for speech-based adaptive fact learning. Digital adaptive learning systems measure learning behavior, and estimate and predict performance for individual learners using cognitive models of memory retrieval. They use this information to provide appropriate feedback to the learner or to create optimal item repetition schedules. Recent developments in speech technology allow for a transition from typing-based to speech-based systems. In this paper, we show that the benefits of adaptive learning generalize from typing-based learning to speech-based learning. This preprint shows that automatic speech recognition technology can be used to effectively replace typed input with spoken input in adaptive learning systems.
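To make the idea of a model-driven repetition schedule concrete, here is a minimal sketch. The text above does not say which memory model these systems use; this example assumes an ACT-R-style base-level activation, A = ln(Σ (now − t_j)^(−d)), where t_j are past encounter times and d is a decay (forgetting) rate. The function names `activation` and `next_item`, the default decay of 0.5, and the per-item decay dictionary are all hypothetical and chosen only for illustration.

```python
import math


def activation(encounter_times, now, decay=0.5):
    """Assumed ACT-R-style base-level activation: ln(sum((now - t) ** -decay))."""
    ages = [now - t for t in encounter_times if t < now]
    if not ages:
        # Item has not been encountered yet, so there is no memory trace.
        return float("-inf")
    return math.log(sum(age ** -decay for age in ages))


def next_item(history, now, decay_by_item=None, default_decay=0.5):
    """Return the item with the lowest estimated activation at time `now`.

    `history` maps item -> list of past encounter times (hypothetical format).
    `decay_by_item` optionally maps item -> individually estimated decay rate.
    """
    decay_by_item = decay_by_item or {}
    return min(
        history,
        key=lambda item: activation(
            history[item], now, decay_by_item.get(item, default_decay)
        ),
    )


if __name__ == "__main__":
    # Two items: "a" was seen 4 seconds ago, "b" was seen 1 second ago.
    history = {"a": [0.0], "b": [3.0]}
    print(next_item(history, now=4.0))
```

In this sketch the item seen longest ago has the lowest activation and is scheduled next. A larger decay value for an item models faster forgetting, which would move that item forward in the schedule.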

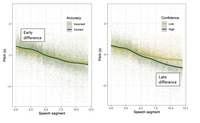

Improving Cognitive Models of Memory Retrieval using Prosodic Speech Features

In this project, we explore the possibility of improving models of memory retrieval by exploiting information present in speech signals. We examine prosodic speech features, such as intonation, rhythm and stress. We demonstrate that prosodic speech features are associated with response times and accuracy scores for retrieval attempts, and that they can be used to predict the extent to which a learner has successfully memorized an item. You can read more about this project here and here.

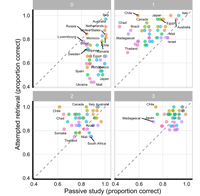

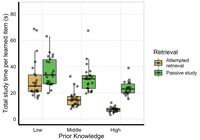

Test Before Study: Attempted Retrieval Benefits in Applied Learning Systems

Several recent studies indicate that retrieval attempts promote learning. Even when a retrieval attempt fails (e.g., because the learner has not encountered the item before), the act of attempting to retrieve something from memory by itself benefits subsequent learning. In this project, we examine whether attempted retrieval promotes learning efficiency in real-world situations, where learners have prior knowledge about materials and where items are repeated multiple times. We show that initial attempted retrieval indeed results in higher retrieval accuracy on later repetitions of the same item, regardless of the success of the initial retrieval attempt. Furthermore, we show that testing before study is an efficient overall learning strategy.

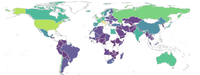

Prior Knowledge Norms for Naming Country Outlines: an Open Stimulus Set

Paired-associate stimuli are an important tool in learning and memory research. In cognitive psychology, many studies use materials about which learners are expected to have little to no prior knowledge. Although such materials are theoretically useful, conclusions from these studies are difficult to generalize to real-world learning contexts, where learners can be expected to have varying degrees of prior knowledge. Therefore, we present a database containing an ecologically valid stimulus set with 112 country outline-name pairs, and report response times and prior knowledge for these items in 285 largely Western European participants. The database can be accessed here. The paper can be found here.

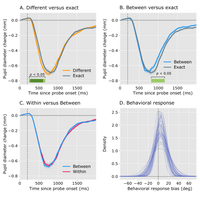

Interactions Between Visual Working Memory, Attention and Color Categories

Recent studies have found that visual working memory (VWM) for color shows a categorical bias: observers typically remember colors as more prototypical of the category they belong to than they actually are. In this project, we further examine color-category effects on VWM using pupillometry. Our results indicate that pupil constriction to colored probes reflects both visual adaptation and VWM content but, unlike behavioral measures, is not notably affected by color categories.